Artly AI is a US-based technology company developing embodied AI systems that replicate human-level dexterity for the service industry. The company's Barista Bot doesn't simply dispense coffee; it executes the complex, fluid motions of an expert barista, from precisely controlled pours to the subtle wrist movements required for latte art.

Robots still struggle with real-world manipulation tasks that require human-like motion, such as grasping objects, pouring liquids, or other work that demands fine motor control. Large language models can be trained on massive public datasets, but robots have no comparable shared, scalable library of movement data, which makes it difficult for them to learn the nuanced manipulation skills that real-world tasks require.

Artly AI addresses this challenge through its Trade School for Robots framework, built on patented foundation models for robot actions. The system enables robots to learn new skills through human demonstration, significantly reducing training data requirements while accelerating skill acquisition.

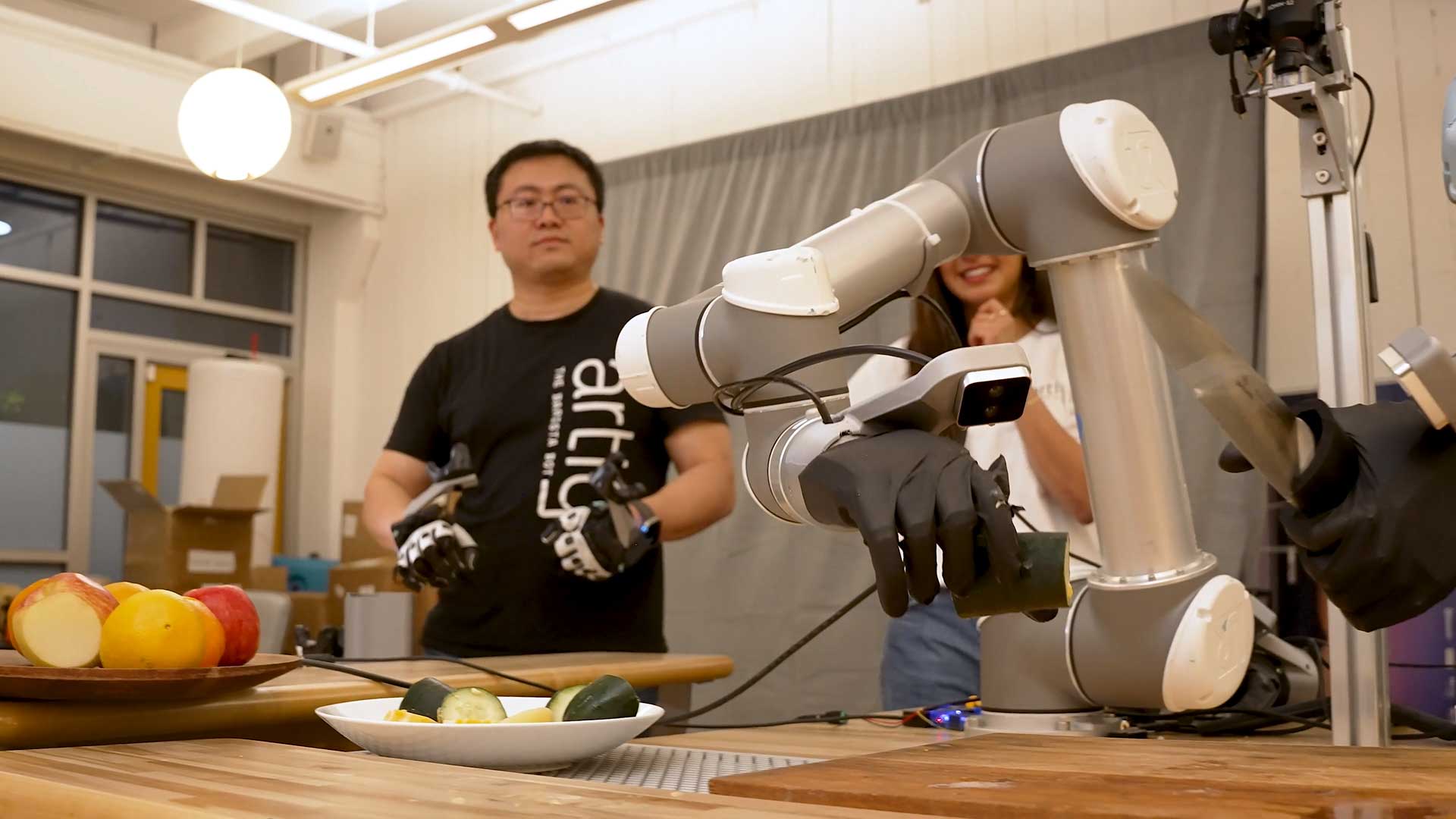

Artly AI’s Trade School for Robots is a training system in which AI learns craftsmanship by observing humans. At its core is imitation learning: Artly records the movements of world-class professionals using motion capture technology. In this setup, a teacher, such as a champion barista, wears MANUS data gloves while performing a task, and the student, the AI system, learns from the precise motion trajectories the gloves capture, including the subtle shakes and tilts required for high-end latte art.
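
To make the idea of imitation learning concrete, the sketch below shows one of the simplest forms of learning from demonstration: a nearest-neighbor policy that, given the robot's current state, returns the action the human teacher took in the most similar recorded state. This is an illustrative assumption, not Artly's patented foundation-model approach; the state and action encodings (cup fill fraction, pitcher tilt, wrist shake amplitude) and the names `Demo` and `nearest_neighbor_policy` are hypothetical.

```python
from dataclasses import dataclass
from math import dist
from typing import Sequence


@dataclass(frozen=True)
class Demo:
    """One recorded (state, action) pair from a human demonstration.
    Hypothetical encoding: both state and action are plain float tuples."""
    state: tuple[float, ...]
    action: tuple[float, ...]


def nearest_neighbor_policy(demos: Sequence[Demo], state: Sequence[float]) -> tuple[float, ...]:
    """Return the action the teacher took in the demonstrated state that is
    closest (by Euclidean distance) to the query state."""
    if not demos:
        raise ValueError("need at least one demonstration")
    best = min(demos, key=lambda d: dist(d.state, state))
    return best.action


# Hypothetical demonstration data:
# state  = (cup fill fraction, pitcher tilt in degrees)
# action = (tilt change in degrees, wrist shake amplitude in mm)
demos = [
    Demo((0.0, 10.0), (5.0, 0.0)),
    Demo((0.5, 30.0), (2.0, 3.0)),
    Demo((0.9, 45.0), (-10.0, 0.0)),
]

print(nearest_neighbor_policy(demos, (0.45, 28.0)))  # → (2.0, 3.0)
```

Nearest-neighbor lookup is used here only because it shows the core idea in a few lines. A practical system would normalize state features with different units and learn a smooth policy that generalizes between demonstrations rather than memorizing individual pairs.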

This learning process is supported by Artly’s AI platform, which acts as a classroom that allows skills to scale globally. For example, once a robot in Seattle learns to prepare a new seasonal drink, that knowledge can be instantly deployed to robots in New York or San Francisco. To graduate, the robot must use computer vision and physical reasoning to interact with the real world. Because it learns from human movement rather than fixed coordinates, the robot can adapt to real-world variations, such as a different-sized milk pitcher or a heavier bag of coffee beans, without being reprogrammed.
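
The contrast between learning human movement and replaying fixed coordinates can be illustrated with a small sketch: if a pour path is stored as offsets relative to the pitcher's pose rather than as absolute positions, it can be re-anchored whenever the pitcher is moved or rotated. This is a hypothetical illustration under a simplified planar assumption (x, y position plus a yaw angle); the function name `to_world` and the coordinate conventions are assumptions, not details of Artly's system.

```python
import math

# A 2D point (x, y); units are whatever the demonstration was recorded in.
Point = tuple[float, float]


def to_world(offsets: list[Point], origin: Point, yaw_rad: float) -> list[Point]:
    """Map waypoints expressed in an object's local frame (e.g. the milk
    pitcher) into world coordinates, given the object's position and its
    rotation about the vertical axis."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    ox, oy = origin
    # Rotate each local offset by yaw, then translate by the object's origin.
    return [(ox + c * x - s * y, oy + s * x + c * y) for x, y in offsets]


# Hypothetical pour path, recorded once relative to the pitcher (units: cm).
pour_path = [(0.0, 0.0), (2.0, 0.0), (2.0, 1.0)]

# The same demonstrated motion, replayed for a pitcher placed at (10, 5)
# and rotated 90 degrees.
for x, y in to_world(pour_path, origin=(10.0, 5.0), yaw_rad=math.pi / 2):
    print(f"({x:.1f}, {y:.1f})")
# prints (10.0, 5.0), (10.0, 7.0), (9.0, 7.0) on separate lines
```

A rigid transform like this only handles changes in where an object sits; adapting to a differently sized pitcher or a heavier load would need the learned model to adjust the motion itself, which is beyond what this sketch shows.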

With 25 degrees of freedom and high-precision, full anatomical hand tracking, MANUS data gloves capture human demonstrations with millimeter-level positional accuracy and high rotational accuracy, preserving the original motion faithfully. This high-fidelity data enables learning models to extract fine-grained manipulation primitives, supporting accurate human-to-robot skill transfer and improved generalization across tasks.

By combining demonstration-based learning with high-fidelity motion capture from MANUS data gloves, Artly enables robots to learn complex manipulation skills in hours instead of weeks. This approach has enabled reliable, revenue-generating deployments in real-world environments while significantly reducing training cost and complexity.

As more robots learn through demonstration, each new skill strengthens a shared, reusable skill library, creating a compounding data advantage. This vision moves physical AI beyond isolated automation toward a scalable future in which robots continuously learn from humans and work alongside them in the real world.