Dexterous manipulation remains one of robotics’ most challenging frontiers. While vision-language-action models show promise for generalist robot capabilities, they face a critical bottleneck: the scarcity of large-scale, action-annotated data for dexterous skills. Traditional teleoperation methods are costly and time-intensive, while existing human motion datasets suffer from viewpoint dependency, occlusion, and restricted capture environments that limit their utility for robot training.

Researchers at Peking University and the Beijing Academy of Artificial Intelligence developed RoboBrain-Dex, a vision-language-action (VLA) model for dexterous manipulation, using MANUS data gloves to overcome these data collection challenges. Their work demonstrates how high-fidelity hand tracking enables the creation of large-scale, multi-source egocentric datasets that bridge human and robotic manipulation.

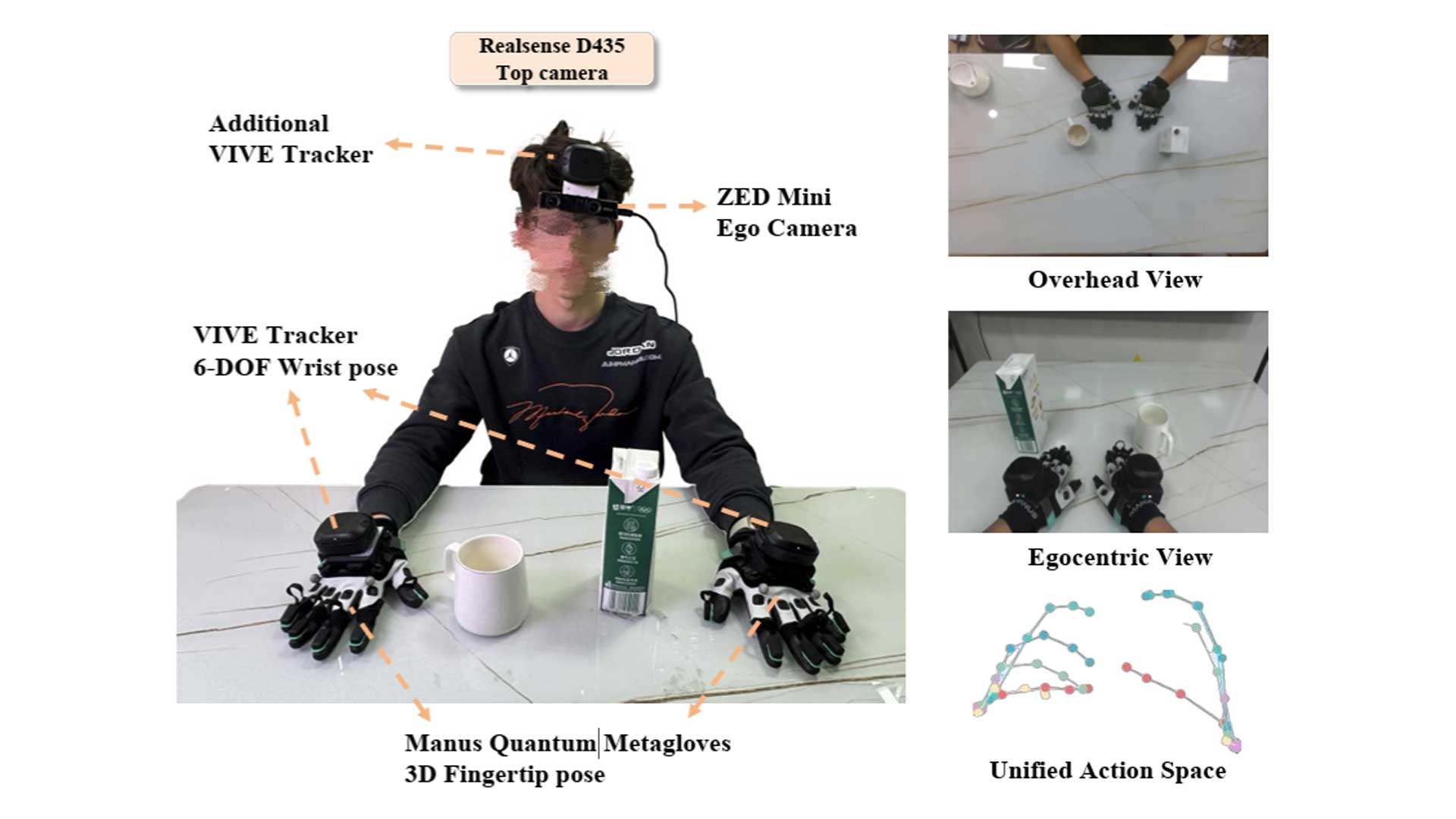

The RoboBrain-Dex research team constructed EgoAtlas, a comprehensive multi-source egocentric dataset integrating human and robotic manipulation data under a unified action space. At the core of their data collection infrastructure were MANUS Quantum Metagloves, which captured precise 3D positions for 25 keypoints per hand.

Unlike camera-based or VR tracking systems, which are constrained by capture volume and occlusion, the MANUS glove-tracker system enabled portable, anytime-anywhere motion capture. Combined with VIVE Trackers for 6-DoF wrist pose, the system provided global hand localization while maintaining fingertip-level precision. This approach eliminated viewpoint dependency and enabled data collection across diverse real-world environments, which is critical for building datasets with the scale and variety needed for robust VLA model training.
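To make the idea of global hand localization concrete, the sketch below shows one way glove keypoints expressed relative to the wrist could be placed in a world frame using a 6-DoF wrist pose. It is an illustration only, not the RoboBrain-Dex pipeline or the MANUS or VIVE SDK: the function names, the `(w, x, y, z)` quaternion order, and the assumption that the glove reports keypoints in a wrist-local frame are all hypothetical.

```python
import math

def quat_to_matrix(qw, qx, qy, qz):
    """Convert a quaternion (w, x, y, z) to a 3x3 rotation matrix."""
    # Normalize first so slightly non-unit tracker output still gives a rotation.
    n = math.sqrt(qw * qw + qx * qx + qy * qy + qz * qz)
    qw, qx, qy, qz = qw / n, qx / n, qy / n, qz / n
    return [
        [1 - 2 * (qy * qy + qz * qz), 2 * (qx * qy - qz * qw), 2 * (qx * qz + qy * qw)],
        [2 * (qx * qy + qz * qw), 1 - 2 * (qx * qx + qz * qz), 2 * (qy * qz - qx * qw)],
        [2 * (qx * qz - qy * qw), 2 * (qy * qz + qx * qw), 1 - 2 * (qx * qx + qy * qy)],
    ]

def keypoints_to_world(wrist_position, wrist_quat, local_keypoints):
    """Map wrist-local keypoints to world coordinates: p_world = R * p_local + t.

    wrist_position  -- (x, y, z) of the wrist in the world frame (hypothetical tracker output)
    wrist_quat      -- (w, x, y, z) wrist orientation in the world frame
    local_keypoints -- list of (x, y, z) points in the wrist frame (hypothetical glove output)
    """
    rotation = quat_to_matrix(*wrist_quat)
    world = []
    for point in local_keypoints:
        rotated = [sum(rotation[i][j] * point[j] for j in range(3)) for i in range(3)]
        world.append([rotated[i] + wrist_position[i] for i in range(3)])
    return world

# Example: wrist at (1, 2, 3), rotated 90 degrees about the world z-axis.
half = math.radians(90) / 2
wrist_quat = (math.cos(half), 0.0, 0.0, math.sin(half))
fingertip_local = [(0.1, 0.0, 0.0)]  # 10 cm along the wrist's x-axis
fingertip_world = keypoints_to_world((1.0, 2.0, 3.0), wrist_quat, fingertip_local)
```

Because the transform depends only on the tracker pose and the glove's local measurements, no external camera has to see the hand, which is the property the paragraph above attributes to the glove-tracker setup.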

The high-fidelity motion data captured by MANUS gloves served dual purposes in the RoboBrain-Dex pipeline. For human demonstrations, the gloves recorded natural manipulation behaviors that provided rich priors for learning robotic actions. For robot teleoperation, the same glove-tracker system enabled precise control: wrist poses were converted via inverse kinematics to robot arm configurations, while fingertip trajectories were mapped to the dexterous hand's joint space through IK-based retargeting.
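As a rough illustration of IK-based fingertip retargeting, the sketch below scales a human fingertip position to a robot finger's size and solves closed-form inverse kinematics for a planar two-link finger. This is a deliberately simplified stand-in, not the retargeting used by the researchers: the two-link planar model, the link lengths, the length-ratio scaling, and all function names are assumptions, and real dexterous hands often have coupled or underactuated joints that this model ignores.

```python
import math

def two_link_ik(x, y, l1, l2):
    """Closed-form IK for a planar two-link finger; returns (q1, q2) in radians.

    Targets outside the reachable annulus are projected onto its boundary.
    Returns the solution with q2 >= 0 (the finger curling in one direction).
    """
    dist = math.hypot(x, y)
    clamped = min(max(dist, abs(l1 - l2)), l1 + l2)
    if dist > 0:
        x, y = x * clamped / dist, y * clamped / dist
    else:
        x, y = clamped, 0.0
    cos_q2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    cos_q2 = max(-1.0, min(1.0, cos_q2))  # guard against rounding error
    q2 = math.acos(cos_q2)
    q1 = math.atan2(y, x) - math.atan2(l2 * math.sin(q2), l1 + l2 * math.cos(q2))
    return q1, q2

def forward_kinematics(q1, q2, l1, l2):
    """Fingertip position of the planar two-link finger for joint angles (q1, q2)."""
    return (
        l1 * math.cos(q1) + l2 * math.cos(q1 + q2),
        l1 * math.sin(q1) + l2 * math.sin(q1 + q2),
    )

def retarget_fingertip(human_tip_xy, human_finger_length, l1, l2):
    """Scale a human fingertip position to the robot finger, then solve IK."""
    scale = (l1 + l2) / human_finger_length
    return two_link_ik(human_tip_xy[0] * scale, human_tip_xy[1] * scale, l1, l2)

# Hypothetical dimensions in meters: a 9 cm human finger and a 4 cm + 3 cm robot finger.
L1, L2 = 0.04, 0.03
q1, q2 = retarget_fingertip((0.06, 0.03), 0.09, L1, L2)
tip = forward_kinematics(q1, q2, L1, L2)
```

Running forward kinematics on the result, as in the last line, is a quick check that the solved joint angles actually place the robot fingertip at the scaled target.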

This seamless human-to-robot translation was critical for collecting the robot demonstration data that complemented RoboBrain-Dex's human dataset. The researchers successfully deployed this teleoperation approach on a Unitree G1 humanoid robot equipped with Inspire 6-DoF dexterous hands, collecting high-quality demonstrations across diverse manipulation tasks.

Built on the multi-source egocentric data enabled by MANUS gloves, RoboBrain-Dex achieved the highest average success rate across six real-world dexterous manipulation tasks. The model demonstrated exceptional generalization to out-of-distribution scenarios, validating the power of training on diverse, high-fidelity human and robot data unified under consistent action representations.

The RoboBrain-Dex work represents a significant step toward generalist models for dexterous manipulation, made possible by data collection infrastructure that combines MANUS’s millimeter-level hand tracking accuracy with portable, scalable deployment. As embodied AI continues to advance toward human-level manipulation capabilities, high-fidelity egocentric data collection remains foundational to bridging the gap between human dexterity and robotic intelligence.