Scaling vision-language-action (VLA) policies to bimanual robots with high degree-of-freedom (DoF) dexterous hands presents a fundamental data challenge. The ByteDexter V2 hand has 21 DoFs; paired with two 7-DoF Franka Research 3 arms, two such hands form a 56-DoF bimanual system (2 × 7 + 2 × 21 = 56) where traditional teleoperation approaches struggle. As ByteDance Seed researchers note in their GR-Dexter technical report, the difficulty compounds significantly when each end-effector is a multi-fingered anthropomorphic hand requiring precise, coordinated control.

For VLA-based generalist manipulation to succeed at this scale, researchers need high-quality demonstration data capturing complex hand-object interactions. The core problem: how to efficiently collect naturalistic bimanual dexterous trajectories that preserve the fine-grained finger kinematics essential for training robust manipulation policies.

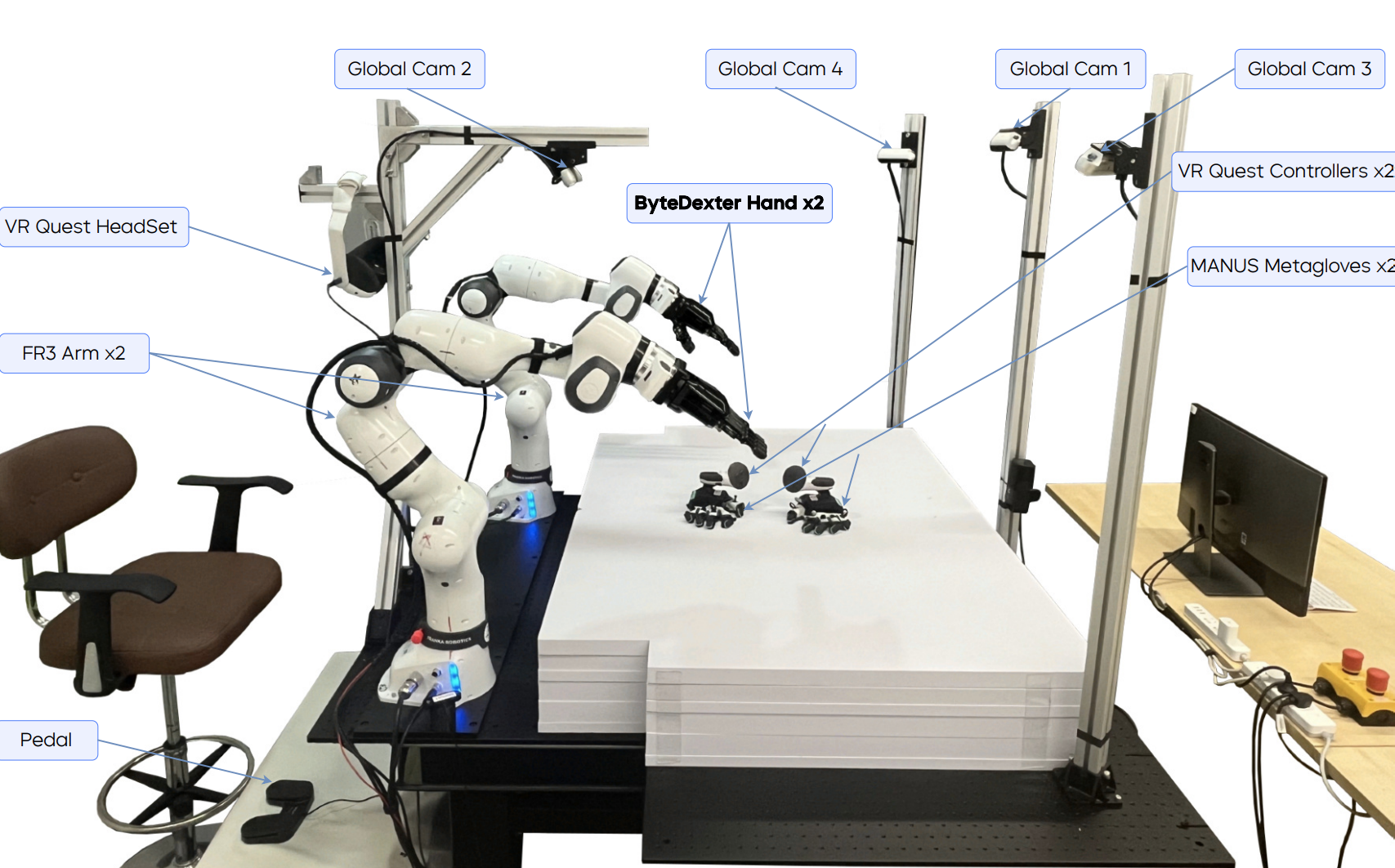

ByteDance Seed addressed this teleoperation challenge by implementing MANUS Metagloves as the primary hand-motion interface in their data collection pipeline. The system combines a Meta Quest VR headset for wrist pose tracking with MANUS gloves for capturing detailed finger movements, with Quest controllers mounted on the glove dorsums to improve coordinated wrist-hand tracking reliability.

This configuration enables teleoperators to simultaneously control two Franka Research 3 arms equipped with ByteDexter V2 hands during long-horizon manipulation tasks. Human hand motions captured by the MANUS gloves are retargeted in real time to joint position commands through a whole-body controller. The hand-motion retargeting is formulated as a constrained optimization problem combining wrist-to-fingertip and thumb-to-fingertip alignment terms with collision-avoidance constraints, solved via Sequential Quadratic Programming.
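The retargeting formulation described above can be illustrated with a minimal sketch. It is not the GR-Dexter implementation: the planar two-finger hand, the link lengths, the joint limits, the weights, and every function name (`fingertip`, `tips`, `cost`, `gap_margin`, `retarget`) are assumptions made for illustration, and SciPy's `SLSQP` solver stands in for whatever Sequential Quadratic Programming solver the authors used.

```python
import numpy as np
from scipy.optimize import minimize

# Hypothetical toy geometry in centimeters, not ByteDexter V2 parameters.
LINKS = (5.0, 4.0)                      # two link lengths per finger
THUMB_BASE = np.array([0.0, -2.0])      # finger bases in the wrist frame
INDEX_BASE = np.array([0.0, 2.0])
MIN_GAP = 1.0                           # minimum allowed fingertip separation


def fingertip(base, q):
    """Planar two-link forward kinematics: joint angles -> fingertip position."""
    a1, a2 = q[0], q[0] + q[1]
    return (base
            + LINKS[0] * np.array([np.cos(a1), np.sin(a1)])
            + LINKS[1] * np.array([np.cos(a2), np.sin(a2)]))


def tips(q):
    """Thumb and index fingertips for the stacked joint vector q (4 angles)."""
    return fingertip(THUMB_BASE, q[:2]), fingertip(INDEX_BASE, q[2:])


def cost(q, human_thumb, human_index, w_wrist=1.0, w_thumb=1.0):
    thumb, index = tips(q)
    # Wrist-to-fingertip alignment: tips are expressed in the wrist frame,
    # so each tip position is itself the wrist-to-fingertip vector.
    wrist_term = (np.sum((thumb - human_thumb) ** 2)
                  + np.sum((index - human_index) ** 2))
    # Thumb-to-fingertip alignment: match the relative vector between tips.
    thumb_term = np.sum(((index - thumb) - (human_index - human_thumb)) ** 2)
    return w_wrist * wrist_term + w_thumb * thumb_term


def gap_margin(q):
    """Collision-avoidance constraint, feasible when the value is >= 0."""
    thumb, index = tips(q)
    return np.sum((index - thumb) ** 2) - MIN_GAP ** 2


def retarget(human_thumb, human_index, q0):
    """Solve for joint angles that track the human fingertip targets."""
    result = minimize(
        cost, q0, args=(human_thumb, human_index),
        method="SLSQP",
        bounds=[(-np.pi / 2, np.pi / 2)] * 4,   # assumed joint limits
        constraints=[{"type": "ineq", "fun": gap_margin}],
        options={"ftol": 1e-10, "maxiter": 200},
    )
    return result.x
```

In this toy setup, a pinch target that places both fingertips at the same point cannot be matched exactly: the solver returns the closest pose that still keeps the tips `MIN_GAP` apart. A real-time loop would typically pass the previous frame's solution as `q0`; that is a general warm-starting practice, not a detail taken from the report.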

The teleoperation system proved reliable across diverse tasks, from coarse manipulation such as building blocks to fine motor tasks including knitting and calligraphy. After minimal training, teleoperators successfully completed long-duration tasks, enabling collection of approximately 20 hours of high-fidelity robot trajectories for both the makeup table decluttering and generalizable pick-and-place experiments.

The MANUS-based teleoperation pipeline proved critical not just for collecting on-robot demonstrations, but for the broader data strategy underpinning GR-Dexter's generalization capabilities. The anthropomorphic correspondence between human hands and the ByteDexter V2 design created a promising path for leveraging large-scale egocentric hand-object interaction datasets.

GR-Dexter's training recipe combines teleoperated robot trajectories with vision-language data, cross-embodiment demonstrations, and over 800 hours of human trajectory data collected via VR devices. The fingertip-centric retargeting approach used in processing MANUS glove data preserves task-relevant contact geometry while remaining agnostic to joint-level discrepancies across embodiments. This alignment strategy enabled seamless integration of heterogeneous data sources into a unified training corpus.
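One way to picture why a fingertip-centric representation is agnostic to joint-level differences is to express every source's fingertips in its own wrist frame. The sketch below assumes that convention; the function name `fingertips_in_wrist_frame`, the optional `scale` factor for hand-size differences, and the array layout are all hypothetical and are not taken from the GR-Dexter report.

```python
import numpy as np


def fingertips_in_wrist_frame(wrist_R, wrist_p, tips_world, scale=1.0):
    """Map world-frame fingertip positions into the wrist frame.

    wrist_R    : (3, 3) rotation of the wrist frame expressed in the world frame
    wrist_p    : (3,)   wrist position in the world frame
    tips_world : (N, 3) fingertip positions in the world frame
    scale      : assumed hand-size ratio between embodiments (hypothetical)
    """
    tips_world = np.asarray(tips_world, dtype=float)
    # For row vectors, (x - p) @ R is the same as R^T (x - p).
    return scale * (tips_world - wrist_p) @ wrist_R
```

Under this assumption, the output depends only on where the fingertips sit relative to the wrist, so human keypoints from a VR device and fingertips computed from a robot hand's forward kinematics end up in the same coordinates regardless of how many joints produced them.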

ByteDance Seed's implementation demonstrates that combining high-fidelity hand tracking with anthropomorphic dexterous hardware creates an effective teleoperation interface for bimanual manipulation research. The MANUS gloves enabled efficient collection of the demonstration data essential for training VLA policies on high-DoF systems. The structural similarity between human and robot hands facilitated cross-embodiment transfer, a key advantage for scaling dexterous manipulation capabilities beyond gripper-based systems.