The MIT.nano Immersion Lab is MIT's multidisciplinary space designed to visualize complex data and prototype immersive technologies. It supports AR and VR research, motion capture, and digital–physical interaction for users across science, engineering, and the arts.

Modern neurosurgical techniques demand extreme precision, especially in pediatric hydrocephalus surgery. For years, young surgeons had to travel across the world to learn from experts like Dr. Benjamin Warf at Boston Children’s Hospital. These procedures are rare and delicate, and the skills are traditionally passed down only through direct mentorship.

To break this barrier, pediatric neurosurgeon Giselle Coelho founded EDUCSIM and partnered with MIT.nano’s Immersion Lab. Together, they created a volumetric VR avatar of Dr. Warf, allowing residents to learn from him remotely as if he were standing beside them.

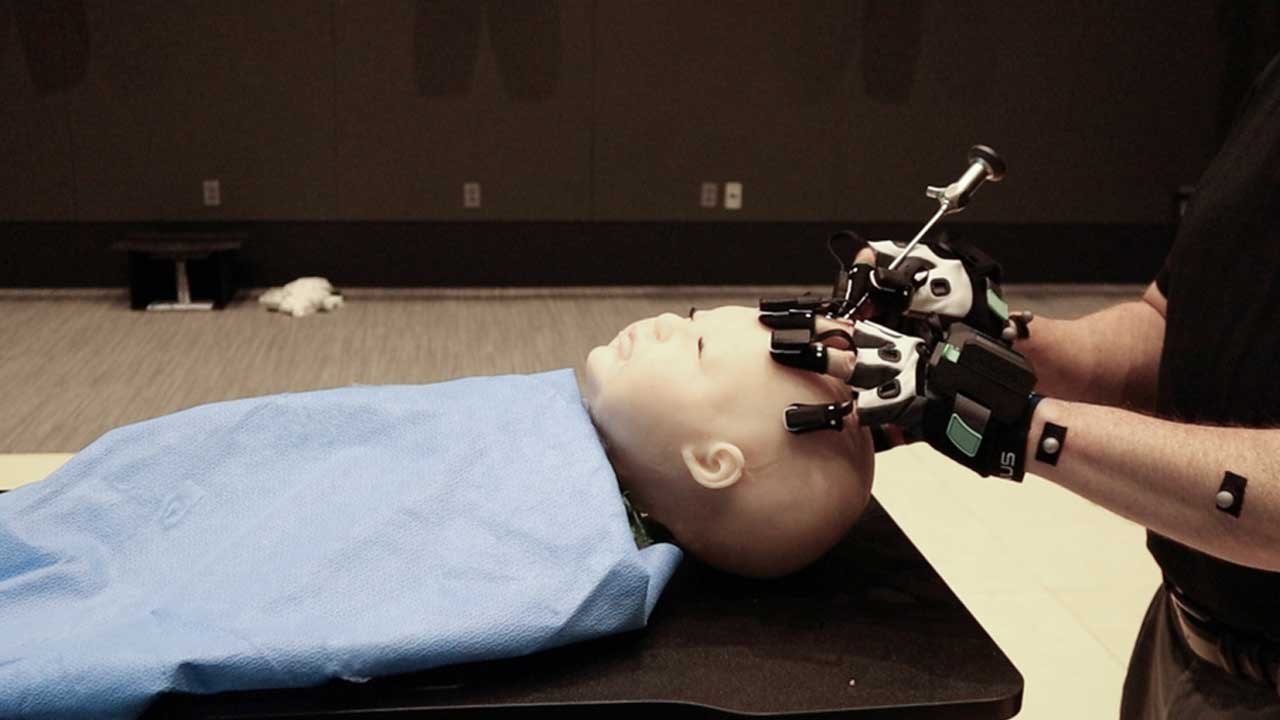

Warf visited MIT.nano on several occasions to be digitally “captured,” including performing an operation on a physical baby model while wearing MANUS Quantum Metagloves and sensor-embedded clothing. The gloves contributed to the capture in two ways:

MANUS gloves provide high-precision sensor data, capturing joint positions, rotations, and angles in real time. This allows the digital model to preserve the exact details of Warf’s technique, from how he stabilizes instruments to how he performs delicate surgical tasks, rather than reducing hand movement to broad gestures. The resulting finger-level data becomes a precise training reference that residents can observe and replicate.
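To make the idea of finger-level data concrete, here is a minimal sketch of how a joint angle can be derived from three captured joint positions. It is an illustration only: the function name `joint_angle_deg` and the plain `(x, y, z)` tuple format are assumptions made for this example, not the MANUS SDK or the pipeline the Immersion Lab used.

```python
import math

def joint_angle_deg(a, b, c):
    """Angle in degrees at joint b, formed by the points a-b-c.

    Each point is a hypothetical (x, y, z) position tuple, for example
    three consecutive joints along one finger.
    """
    # Vectors pointing from the middle joint to its two neighbors.
    v1 = tuple(p - q for p, q in zip(a, b))
    v2 = tuple(p - q for p, q in zip(c, b))

    dot = sum(p * q for p, q in zip(v1, v2))
    n1 = math.sqrt(sum(p * p for p in v1))
    n2 = math.sqrt(sum(p * p for p in v2))
    if n1 == 0 or n2 == 0:
        raise ValueError("joint positions must be distinct")

    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, dot / (n1 * n2)))
    return math.degrees(math.acos(cos_theta))
```

With this convention a fully extended finger segment reads close to 180 degrees, and the value falls as the joint flexes.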

MANUS gloves are compatible with VR environments and can support workflows in surgical simulators and mixed-reality training systems. By synchronizing positional tracking, body posture, and hand-motion data, MANUS enables the surgeon to appear as a fully realized digital twin in immersive environments. Trainees can observe technique from multiple angles, receive real-time guidance, and experience spatial presence rather than static demonstrations.
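One simple way to think about synchronizing body and hand data is nearest-timestamp matching: each body-tracking frame is paired with the hand sample recorded closest to it in time. The sketch below assumes both streams arrive as time-sorted lists of `(timestamp_seconds, pose)` pairs. The names `align_streams` and `nearest_sample` and the stream format are hypothetical, and the article does not describe how the actual capture was synchronized.

```python
from bisect import bisect_left

def nearest_sample(timestamps, samples, t):
    """Return the sample whose timestamp is closest to t.

    `timestamps` must be sorted ascending and parallel to `samples`.
    """
    if not timestamps:
        raise ValueError("stream is empty")
    i = bisect_left(timestamps, t)
    if i == 0:
        return samples[0]
    if i == len(timestamps):
        return samples[-1]
    before, after = timestamps[i - 1], timestamps[i]
    # Prefer the later sample only when it is strictly closer to t.
    return samples[i] if after - t < t - before else samples[i - 1]

def align_streams(body, hands):
    """Pair every body frame with the nearest-in-time hand sample.

    Both inputs are hypothetical lists of (timestamp_seconds, pose),
    sorted by timestamp. Returns a list of (timestamp, body_pose, hand_pose).
    """
    hand_times = [t for t, _ in hands]
    hand_poses = [pose for _, pose in hands]
    return [
        (t, body_pose, nearest_sample(hand_times, hand_poses, t))
        for t, body_pose in body
    ]
```

Nearest-timestamp matching is the simplest option. A production system might instead interpolate between neighboring samples or rely on a shared hardware clock.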

“These technologies have mostly been used for entertainment or VFX or CGI. But we’re applying it now for real medical practice and real learning.”

— Talis Reks, AR/VR technologist, MIT.nano Immersion Lab

The project with Warf and EDUCSIM enables residents over 3,000 miles away in Brazil to observe and interact with the avatar of a world-class neurosurgeon, and to practice delicate surgery on a realistic baby brain model.

“If one day we could have avatars, like this one from Giselle, in remote places showing people how to do things and answering questions without the cost or time of travel, I think it could be really powerful,” says Warf, who has spent years training pediatric neurosurgeons worldwide.

Looking ahead, combining the precise hand-motion capture of MANUS gloves with VR/AR environments could support broader applications such as remote surgical mentoring, teleoperated robotics, industrial skill transfer, and global training workflows.