China’s National and Local Co-Built Humanoid Robotics Innovation Center has launched the Baihu Data Hub, an open-source platform providing high-fidelity human-motion datasets to accelerate humanoid robotics research. The center’s flagship Qinglong robot, unveiled in 2024, is China’s first open-source general-purpose humanoid. It is used to collect and demonstrate skill-acquisition data through embodied AI and collaborative annotation.

Training humanoid robots requires massive datasets of human-like manipulation. Yet the most valuable data, precisely labeled hand-object interactions, is also the hardest to obtain.

This data bottleneck slows progress toward robots with human-level dexterity.

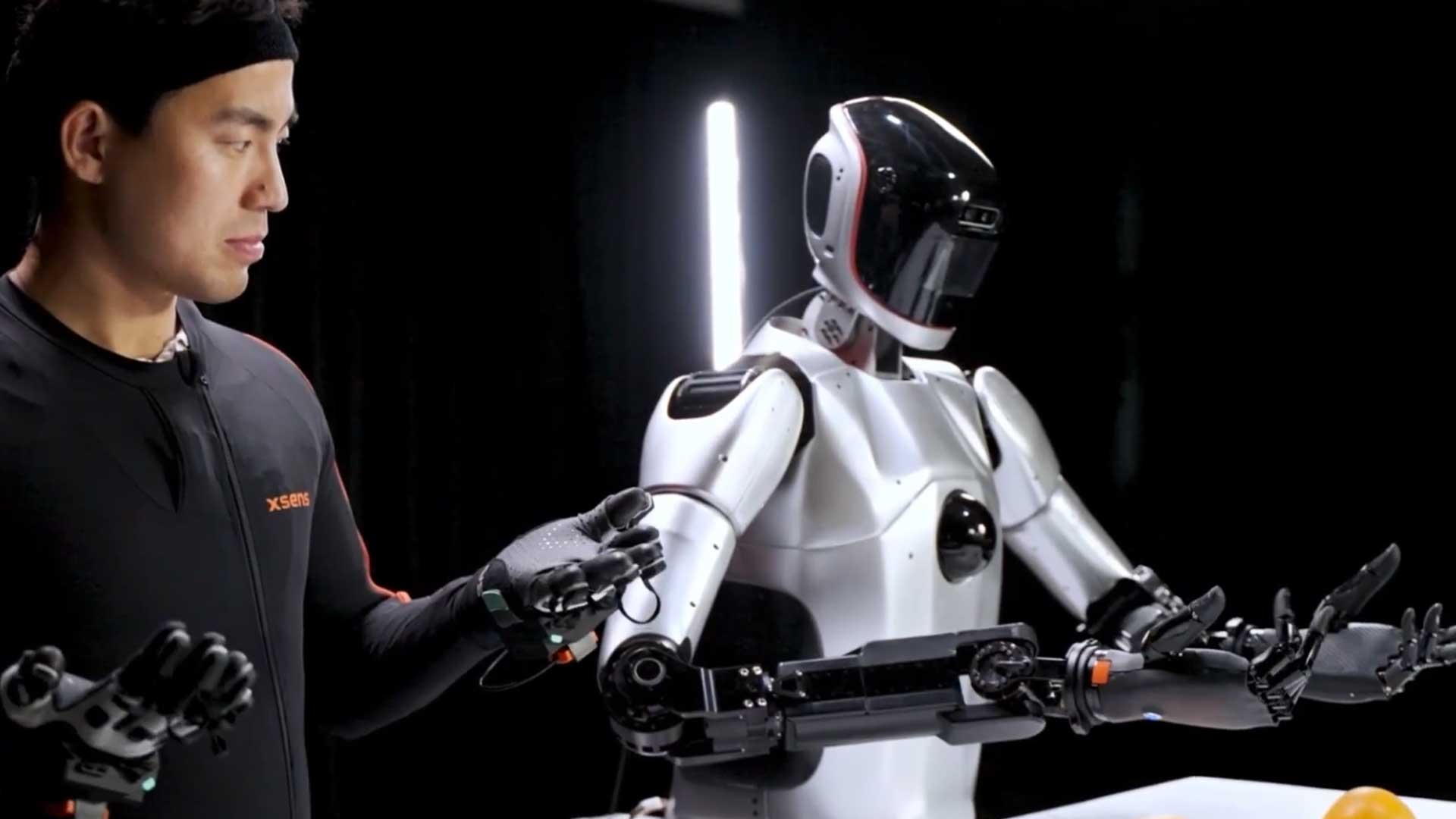

To overcome the data bottleneck, China’s first open-source humanoid, Qinglong, integrates MANUS Quantum Metagloves with full-body motion capture.

Together, these systems capture the rare, high-quality hand and body motion data that embodied AI needs.

Robots learn fine motor skills by breaking complex tasks into small, labeled micro-actions. Take plugging in a cable: to insert a power cord into an outlet, the robot performs a sequence of hand-object interactions, grasping the plug, aligning it with the socket, and inserting it.
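To make the idea of labeled micro-actions concrete, here is a minimal sketch. The `Frame` and `Segment` types and the `segment_by_label` function are hypothetical and do not reflect the Baihu Data Hub’s actual data format. The sketch assumes each motion-capture frame already carries a micro-action label and simply groups consecutive frames that share a label into segments such as grasp, align, and insert.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Frame:
    """One motion-capture sample (hypothetical schema, for illustration only)."""
    t: float                    # timestamp in seconds
    finger_joints: List[float]  # glove joint angles in radians
    label: str                  # micro-action label, e.g. "grasp"


@dataclass
class Segment:
    """A run of consecutive frames sharing one micro-action label."""
    label: str
    start: float
    end: float
    frames: List[Frame]


def segment_by_label(frames: List[Frame]) -> List[Segment]:
    """Group consecutive frames with the same label into micro-action segments."""
    segments: List[Segment] = []
    for f in frames:
        if segments and segments[-1].label == f.label:
            # Same micro-action continues: extend the current segment.
            seg = segments[-1]
            seg.frames.append(f)
            seg.end = f.t
        else:
            # Label changed: start a new segment.
            segments.append(Segment(f.label, f.t, f.t, [f]))
    return segments


# A toy "plug in a cable" demonstration with made-up values.
demo = [
    Frame(0.0, [0.1, 0.2], "grasp"),
    Frame(0.1, [0.4, 0.5], "grasp"),
    Frame(0.2, [0.4, 0.5], "align"),
    Frame(0.3, [0.4, 0.6], "align"),
    Frame(0.4, [0.4, 0.6], "insert"),
]
for seg in segment_by_label(demo):
    print(seg.label, seg.start, seg.end, len(seg.frames))
```

Grouping by contiguous runs, rather than by label alone, keeps repeated attempts (for example, a second "align" after a failed insert) as separate segments.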

These micro-movements form a rich dataset of hand-object interactions for imitation and reinforcement learning.
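One common way such data feeds imitation learning is behavior cloning, where each observation is paired with the action the demonstrator took next. The sketch below assumes a hypothetical demonstration format of (joint angles, label) steps and treats the joint-angle change between consecutive steps as the action. Real pipelines may use different observations and action spaces.

```python
from typing import List, Tuple

# Hypothetical demonstration step: (glove joint angles in radians, micro-action label).
Step = Tuple[List[float], str]
# Behavior-cloning sample: (observation, micro-action label, action).
Sample = Tuple[List[float], str, List[float]]


def to_bc_samples(demo: List[Step]) -> List[Sample]:
    """Pair each step's joint angles with the change to the next step's angles.

    The action is assumed to be a joint-angle delta; this is an illustrative
    choice, not a documented Baihu or Qinglong action space.
    """
    samples: List[Sample] = []
    for (q, label), (q_next, _) in zip(demo, demo[1:]):
        action = [b - a for a, b in zip(q, q_next)]
        samples.append((q, label, action))
    return samples


demo: List[Step] = [
    ([0.0, 0.0], "grasp"),
    ([0.5, 0.25], "grasp"),
    ([0.5, 0.75], "align"),
    ([1.0, 0.75], "insert"),
]
for obs, label, action in to_bc_samples(demo):
    print(label, obs, action)
```

The final step yields no sample because it has no successor. A policy trained on these samples would learn to map observations, optionally conditioned on the micro-action label, to actions.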

Captured and labeled micro-movements then flow through a structured, multi-stage AI process.

This staged approach aims to turn raw motion data into human-level dexterity.